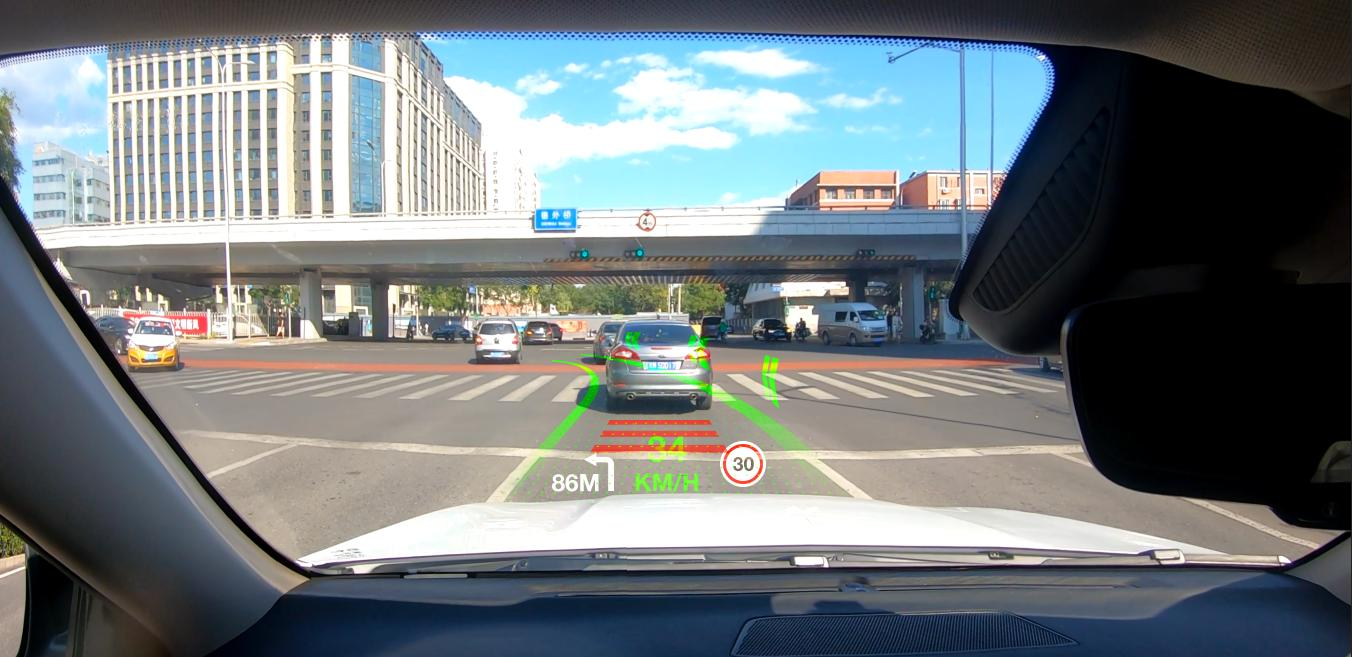

Ever glanced down at your navigation and suddenly realized you’re veering off course? This heart-stopping moment highlights a fatal flaw in traditional driving displays. According to NHTSA, around 80% of collisions involve such distractions [1]. Traditional HUDs only worsen the issue—blurry projections that fade in sunlight, floating navigation arrows misaligned by 15cm, and 200ms delays causing motion sickness [2][3].

Traditional displays suffer from three critical flaws: poor visibility (washed-out in sunlight, ghosting at night), motion discomfort (delayed visuals causing eye strain), and disconnected guidance (virtual arrows floating above real roads). These aren’t just annoyances—they’re safety hazards [5].

Xiaopeng’s AR-HUD isn’t just an upgrade—it’s a complete rethink, merging Huawei’s precision hardware with Xiaopeng’s AI smarts to keep your eyes on the road, not screens.

Think of traditional HUDs as drawing stick figures on foggy glass—Xiaopeng’s AR-HUD is like 3D-printing directions directly onto the pavement. This leap from "floating info" to "grounded guidance" solves the age-old frustration of arrows that drift on hills or wobble over bumps.

Huawei’s 10-billion-yuan optical investment delivers an 87-inch display spanning multiple lanes, 12,000 nits brightness (visible in direct sunlight), and 1,800:1 contrast for night clarity [3]. A 10-meter virtual distance and ≤1% distortion (industry avg: 3-5%) mean projections align naturally with your vision [9].
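To get a feel for these numbers, the apparent size of the image can be estimated with basic trigonometry. The sketch below assumes that "87-inch" refers to the diagonal of the virtual image as seen at the 10-meter virtual distance, which the source does not state explicitly. The function name is illustrative only.

```python
import math

INCH_TO_M = 0.0254  # exact definition of the inch in meters


def angular_size_deg(diagonal_inch: float, virtual_distance_m: float) -> float:
    """Angle in degrees subtended by an image of the given diagonal at the given distance."""
    half_diagonal_m = diagonal_inch * INCH_TO_M / 2.0
    return math.degrees(2.0 * math.atan(half_diagonal_m / virtual_distance_m))


if __name__ == "__main__":
    # Assumption: 87-inch diagonal measured at the 10 m virtual image plane.
    print(f"{angular_size_deg(87, 10):.1f} degrees")
```

Under that assumption the image spans roughly 12.6 degrees of the driver's view along its diagonal.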

Xiaopeng’s secret? "Road slope curve fitting"—creating a 3D digital twin of the pavement so arrows adhere to real contours, not float above them. Paired with 3D OCC technology and 100ms latency, what you see matches real-time road conditions [3].

Xiaopeng AR-HUD rendering process: grid points forming a slope curve
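The source does not describe how the slope curve is actually computed, so the following is only a rough illustration of the general idea: fit a smooth curve through sampled road heights, then place each arrow point on that curve instead of on a flat plane. It assumes a quadratic height profile and an ordinary least-squares fit, and both function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def fit_quadratic(xs: Sequence[float], zs: Sequence[float]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a + b*x + c*x**2 using the 3x3 normal equations."""
    # Power sums S[k] = sum(x**k) and moment sums T[k] = sum(z * x**k).
    S = [sum(x ** k for x in xs) for k in range(5)]
    T = [sum(z * x ** k for x, z in zip(xs, zs)) for k in range(3)]
    # Augmented matrix of the normal equations.
    m = [[S[i + j] for j in range(3)] + [T[i]] for i in range(3)]
    # Gaussian elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    # Back substitution.
    coef = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        s = m[i][3] - sum(m[i][j] * coef[j] for j in range(i + 1, 3))
        coef[i] = s / m[i][i]
    return coef[0], coef[1], coef[2]


def anchor_heights(coef: Tuple[float, float, float], distances: Sequence[float]) -> List[float]:
    """Road height at each look-ahead distance, used to anchor arrow points."""
    a, b, c = coef
    return [a + b * d + c * d * d for d in distances]


if __name__ == "__main__":
    # Hypothetical grid samples: distance ahead (m) and measured road height (m).
    grid_x = [0.0, 10.0, 20.0, 30.0, 40.0]
    grid_z = [0.0, 0.3, 0.8, 1.5, 2.4]
    curve = fit_quadratic(grid_x, grid_z)
    print(anchor_heights(curve, [5.0, 15.0, 25.0]))
```

A production system would presumably work on a full 3D surface and update it every frame; the one-dimensional profile here only shows why a fitted curve keeps arrows on the pavement when the road rises or dips.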

Key Fixes for Drivers

• No more floating arrows—slope algorithms anchor guidance to actual road contours

• See clearly always: 12,000 nits cuts through sunlight; 1,800:1 contrast sharpens night visibility

• Low lag: a 100 ms response, half the 200 ms delay cited for traditional HUDs, keeps visuals in step with real-world speed
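Why latency matters can be shown with simple arithmetic: during the display delay, the car keeps moving, so the overlay lags the road by speed multiplied by delay. The sketch below compares the 100 ms and 200 ms figures quoted in the article. The 100 km/h speed is an assumed example value, and the function name is illustrative.

```python
def lag_distance_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance in meters the car travels while the display is catching up."""
    speed_ms = speed_kmh / 3.6          # km/h to m/s
    return speed_ms * latency_ms / 1000.0


if __name__ == "__main__":
    speed = 100.0  # assumed highway speed in km/h
    for latency in (200.0, 100.0):
        print(f"{latency:.0f} ms at {speed:.0f} km/h -> {lag_distance_m(speed, latency):.2f} m")
```

At the assumed 100 km/h, a 200 ms delay corresponds to about 5.6 m of travel and a 100 ms delay to about 2.8 m.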

This 87-inch "digital co-pilot" paints green paths directly onto roads, with red "X"s blocking wrong turns at complex intersections—reducing wrong-way incidents by 67% [9]. Unlike competitors that glitch on bumps, Xiaopeng’s frame-smoothing keeps arrows steady within 0.5°—like a gimbal for your navigation [14].

AR-HUD light carpet effect showing the green path hugging the road
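The article does not say how the frame-smoothing works. As a minimal sketch of one common approach, the code below low-pass filters a noisy pitch signal and limits how far the displayed arrow may rotate per frame. The 0.5 degree limit is borrowed from the figure quoted above; the filter weight, the per-frame interpretation of that limit, and the function name are all assumptions.

```python
from typing import List, Sequence


def smooth_pitch(raw_deg: Sequence[float], alpha: float = 0.2,
                 max_step_deg: float = 0.5) -> List[float]:
    """Exponentially smooth pitch samples and clamp the change per frame."""
    if not raw_deg:
        return []
    smoothed: List[float] = []
    current = raw_deg[0]
    for sample in raw_deg:
        # Exponential moving average pulls the value toward the new sample.
        target = current + alpha * (sample - current)
        # Clamp the frame-to-frame change so a bump cannot jerk the arrow.
        step = max(-max_step_deg, min(max_step_deg, target - current))
        current += step
        smoothed.append(current)
    return smoothed


if __name__ == "__main__":
    # Hypothetical pitch readings (degrees) while driving over a bump.
    print(smooth_pitch([0.0, 5.0, -5.0, 5.0, 0.0]))
```

The trade-off in this kind of filter is responsiveness: a smaller weight or tighter clamp gives a steadier arrow but follows genuine slope changes more slowly.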

Ever wished your car could "explain" its next move? This system turns driving decisions into visual stories: ripples signal "waiting for lane gap" 1.5s before merging; stretching arrows mean "accelerating now." Covering 6 scenarios (traffic stops to parking paths), it boosts trust by 75% [14].

Rain, fog, or glare? 12,000 nits of brightness (3x brighter than traditional HUDs) and 1,800:1 contrast highlight lane lines and the contours of the vehicle ahead when visibility drops [16]. It also warns of "ghost probes" (pedestrians who suddenly step out from behind an obstacle) 1.5 s in advance, turning chaos into clarity.

Imagine driving through a Shanghai rainstorm—wipers can’t keep up, but AR-HUD overlays glowing lane lines and highlights the car ahead in red. You stay centered, no white-knuckling the wheel. This isn’t just cool tech—it’s everyday safety [2].

For Chongqing’s Huangjuewan Overpass (15 ramps, 8 directions), the dynamic light carpet guides drivers with glowing paths and exit markers. "It’s like having a co-pilot drawing directly on the road," says local driver Ms. Wang. Tests show AR navigation cuts errors by 45% and reduces eye-off-road time by 70% [3].

AR-HUD showing virtual lane markers on foggy road

In emergency scenarios—like a cyclist darting from parked cars—the system flashes red warning frames 0.3s faster than traditional alerts, giving drivers critical reaction time [9]. These aren’t just specs—they’re split-second saves.

Another cited source puts the reduction in eye-off-road time for AR-HUD drivers at 43% and the improvement in emergency braking speed at 27% [10]. From rain-slicked highways to confusing interchanges, it turns stress into confidence.

G7 owners rave: "AR navigation is so accurate, I never get lost now," one driver posted, calling it "better than the G6’s instrument panel" despite minor light carpet delays [17]. 87% of pre-order buyers listed AR-HUD as a top reason for purchase [7].

The few complaints? Some needed time to adjust: "City traffic felt overwhelming at first, like getting used to new glasses," admitted one tester [19]. A small group with astigmatism reported clarity issues, while others wanted more display customization [19].

Xiaopeng is addressing these with OTA updates—smoothing early light carpet delays and planning brightness/contrast tweaks [17]. For most, though, the verdict’s clear: "It’s not just a gadget—it’s like having a co-pilot who never gets tired," summed up one owner [18].

Tomorrow’s AR-HUD won’t just navigate—it could highlight your friend’s house with a virtual flag or warn of potholes ahead. By 2025, AR-HUD 3.0 will project 10+ meters ahead with multi-focal tech, eliminating dizziness [22]. Eventually, your entire windshield could become a smart display where passengers interact with AR content too [3].

This matters beyond flashy tech. When your car highlights a pedestrian in red or shows exactly where it will steer, you don’t just use autonomous features—you trust them. That trust will make self-driving cars mainstream [1].

By 2026, Xiaopeng plans to equip every model with AR-HUD, turning premium tech into standard fare [3]. This isn’t just upgrading displays—it’s creating a new language between drivers and cars.

Next time you’re stuck in traffic, imagine if your windshield didn’t just show the road—it guided you through it. That future isn’t coming—it’s here, and it’s called Xiaopeng AR-HUD. From eliminating dangerous downward glances to turning confusing interchanges into clear paths, this technology doesn’t just change how we drive—it makes every journey safer, calmer, and a little more magical.
